Seven Guidelines to Ensure Ethical AI

The organisation of tomorrow will be built around data, and it will require artificial intelligence to make sense of all that data. Artificial intelligence is a broad discipline with the objective of developing intelligent machines.

AI consists of several subfields. Machine learning (ML) is a subset of AI that enables machines to learn from data. Reinforcement learning is a subset of ML that focuses on artificial agents that use trial and error to improve themselves. Deep learning, also a subset of ML, aims to mimic the human brain to detect patterns in large datasets and benefit from those patterns.

Artificial intelligence has been around since the 1950s, thanks to the work of Alan Turing, who is widely regarded as the father of theoretical computer science and artificial intelligence. Back then, Alan Turing already dreamed of machines that would eventually “compete with men in all purely intellectual fields”.

In recent years, we have come a lot closer to Turing’s dream. Thanks to billions of dollars in research spent by tech giants such as Google, Facebook, Tencent, Baidu, Apple and Microsoft, there has been increased attention on AI, resulting in a variety of ever more intelligent AI applications in almost every domain.

The EU and AI Ethics

As attention for AI grows, so does the call for ethical AI. This is not surprising given the many problems we have already encountered. In her 2016 book Weapons of Math Destruction, Cathy O’Neil pointed out the problems that can arise when we rely too much on unaccountable AI. These problems stem from biased algorithms that are trained on biased data and developed by biased developers.

Earlier this month, the AI Now Institute warned that due to a lack of diversity within the AI development community, AI is at risk of preserving historical biases and power imbalances. Already, the overwhelmingly white and male field has resulted in problems such as image recognition services that offend minorities, racist chatbots and technology that cannot recognise users with dark skin colours.

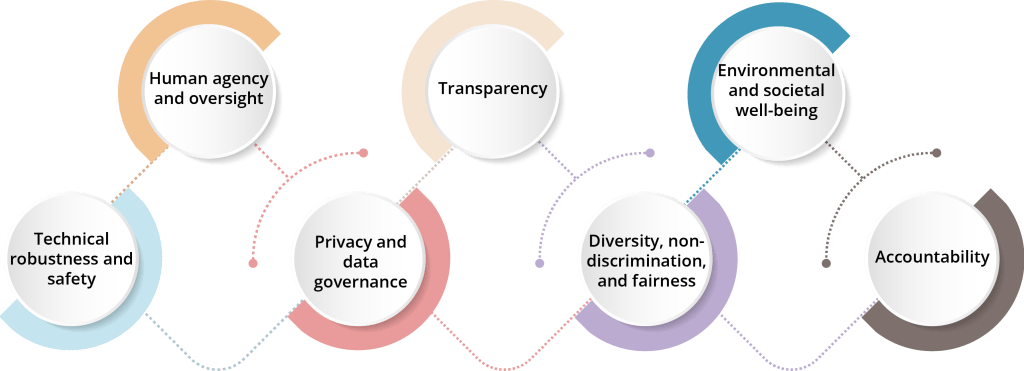

High-quality, unbiased data combined with the right processes to ensure ethical behaviour within a digital environment could significantly contribute to AI that can behave ethically. Since this is difficult to achieve, the European Union published a set of guidelines on how to develop ethical AI. The seven guidelines aim to limit the existing problems with AI.

Figure 1: EU guidelines for ethical AI

In brief, these guidelines include:

- Human agency and oversight – AI should empower humans, but humans should control AI;

- Technical robustness and safety – AI should be safe and prevent (un)intentional harm;

- Privacy and data governance – AI should respect user privacy and ensure adequate data governance;

- Transparency – AI should not be a black box and should be able to explain itself in plain language;

- Diversity, non-discrimination and fairness – bias should be prevented, and diversity encouraged;

- Societal and environmental well-being – AI should benefit all of humanity and be sustainable and environmentally friendly;

- Accountability – AI should be responsible and accountable.

If you want to download the full report on ethical AI guidelines, click here.

The objective of these seven AI guidelines is to create trustworthy AI that is lawful (respects all applicable laws and regulations), ethical (respects ethical principles and values) and robust (from a technical and social perspective). Hopefully, these seven guidelines will help the AI community ensure the further development of ethical AI, not only in Europe but across the globe, since AI does not know borders.