OpenAI Launched GPT-4o: The Future of AI Interactions Is Here

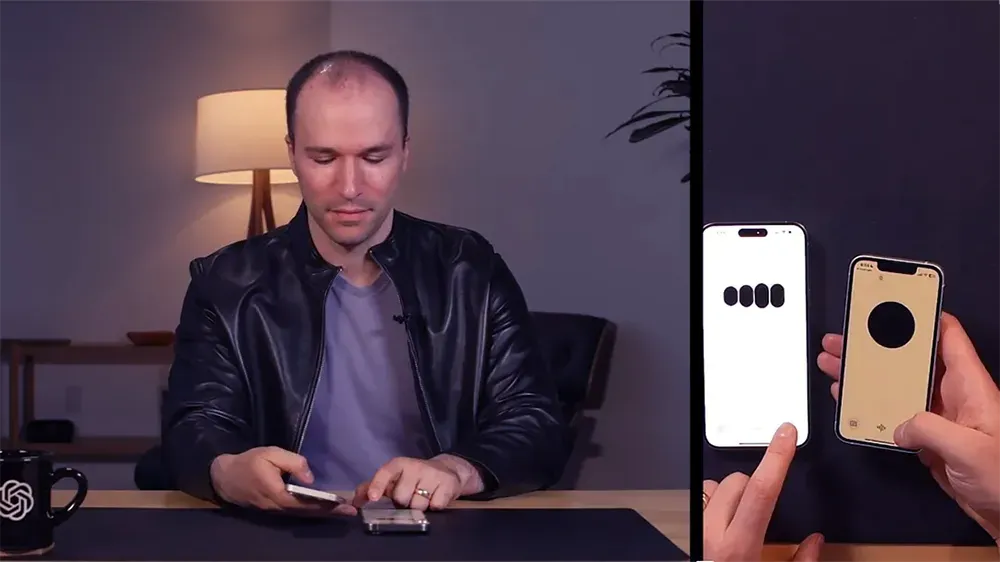

OpenAI just launched its next model: GPT-4o (“o” for “omni”), and according to OpenAI, it promises to revolutionize human-computer interaction with real-time capabilities across text, audio, and vision. This “omnimodel” responds as swiftly as humans and can seamlessly transition between tasks. It promises to elevate ChatGPT into a versatile digital assistant, capable of real-time conversations, visual problem-solving, and emotional intelligence.

The model is twice as fast and half the price of its predecessor, making advanced AI accessible to far more users.

This latest iteration merges capabilities across text, audio, and vision into a single model, enabling it to process and respond to inputs in real time. With an average response time of 320 milliseconds to audio input, GPT-4o responds at close to human conversational speed, setting a new standard for AI responsiveness and interaction fluidity.

According to OpenAI, GPT-4o achieves state-of-the-art performance on visual perception benchmarks and dramatically improves speech recognition across all languages, particularly those with fewer resources.

For businesses, this translates to a myriad of opportunities. The enhanced capabilities of GPT-4o can streamline customer service, making interactions more natural and efficient. Companies can deploy AI that understands context, tone, and even emotions, leading to more satisfying customer experiences. Real-time translation and multilingual support mean businesses can engage with a global audience effortlessly, breaking down language barriers and expanding market reach.

In sectors like education and training, GPT-4o’s ability to provide real-time, interactive learning experiences could revolutionize how knowledge is disseminated and absorbed. Imagine AI tutors that provide instant feedback and adjust their teaching methods based on the learner’s emotional state and comprehension level. This personalized approach can enhance learning outcomes and keep students engaged.

The integration of vision capabilities means GPT-4o can assist in fields requiring visual analysis, such as healthcare, engineering, and design. It could help interpret medical images, assist in diagnostics, or support the design of intricate products, potentially improving precision and reducing human error. The ability to reason through visual problems in real time opens new avenues for innovation and efficiency.

However, this rapid advancement also raises concerns. As GPT-4o becomes more integrated into our daily lives, there is a risk of over-reliance on AI, potentially eroding critical thinking and interpersonal skills. The ethical implications of AI systems detecting and responding to human emotions also need careful consideration. Privacy issues could arise from AI’s ability to process and interpret personal data, making robust safeguards essential.

GPT-4o offers transformative potential for businesses and society by enhancing productivity, efficiency, and global connectivity. Yet, it also necessitates a balanced approach to ensure that while we harness its capabilities, we remain vigilant about the ethical and social implications of increasingly sophisticated AI systems.

Read the full article on OpenAI.
