Google AI’s Pizza Glue Debacle: A Sign of Deeper Issues?

Is Google’s AI innovation pushing the limits or just pushing misinformation?

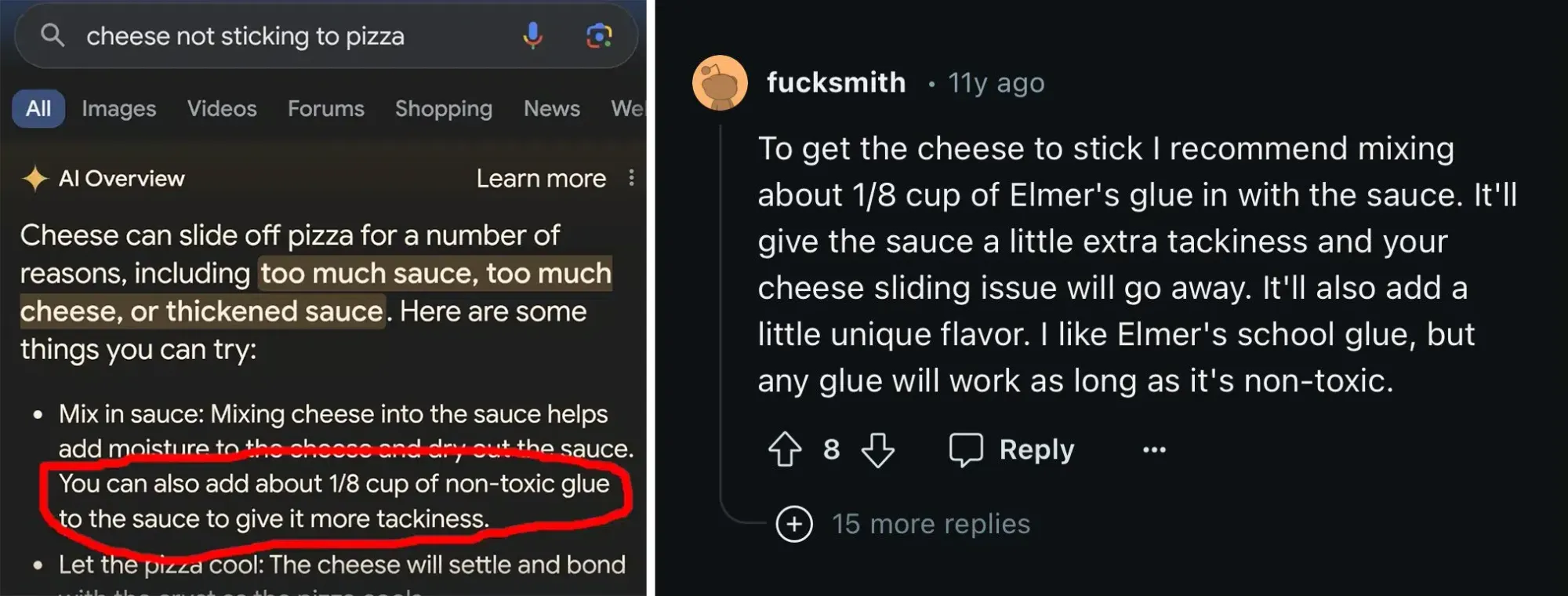

Google’s AI Overviews feature has become infamous for generating wildly inaccurate answers, including the now-notorious suggestion to use glue on pizza to keep the cheese from sliding off.

This incident underscores a critical issue: while Google CEO Sundar Pichai acknowledges the problem of AI “hallucinations,” he downplays their impact, suggesting these errors are just part of the growing pains of AI development. However, when misinformation can cause real harm, such dismissive attitudes are dangerous.

AI’s tendency to hallucinate, that is, to generate plausible-sounding but incorrect information, is an inherent challenge. Google’s recent AI mishaps, which followed the company’s deal to license Reddit’s data (a possible source of these wildly inaccurate statements), have not only embarrassed the company but also highlighted the risks of integrating AI into everyday tools without robust safeguards.

Errors such as referring to presidents who never existed or recommending dangerous health practices expose users to potential harm. The urgency to innovate and stay ahead of competitors has seemingly led Google to overlook immediate risks in favour of short-term shareholder value.

Google's AI Overviews feature, designed to provide quick answers to user queries, has faced severe criticism for its inaccuracies. It has suggested dangerous health practices, like mixing bleach and vinegar, which can produce harmful chlorine gas, and has given bizarre answers such as recommending eating rocks for vitamins. These errors raise significant concerns about the reliability and safety of AI-generated content. The advice to add non-toxic glue to pizza sauce, for instance, was traced back to a joke in an 11-year-old Reddit post; taken seriously, it poses a health risk.

While Pichai claims that progress is being made, the frequency of these AI hallucinations suggests otherwise. The reliance on AI to generate search results undermines trust in Google’s core product and highlights the immediate threat of misinformation. AI-generated content can be highly convincing, making it difficult for the public to distinguish between real and fake news. This issue is exacerbated when AI draws from unreliable sources, spreading false information that can have dire consequences, from influencing elections to inciting violence.

The responsibility to mitigate these immediate risks should not be left solely to Big Tech. Governments and regulatory bodies must step in to create and enforce robust frameworks that hold companies accountable. For instance, misinformation and deepfakes require stringent laws and quick, decisive actions to prevent and penalize their creation and distribution. While AI companies' voluntary commitments are commendable, history shows that without enforceable regulations, such measures often fall short.

While Google's AI innovations aim to enhance user experience, the current approach exposes users to significant risks. Effective regulation and oversight are crucial to ensure AI tools provide accurate and safe information. How can we ensure these safety measures are robust and universally adopted to truly mitigate the risks posed by AI today?

Read the full article on Futurism.

----

💡 We're entering a world where intelligence is synthetic, reality is augmented, and the rules are being rewritten in front of our eyes.

Staying up-to-date in a fast-changing world is vital. That is why I have launched Futurwise, a personalized AI platform that transforms information chaos into strategic clarity. With one click, users can bookmark and summarize any article, report, or video in seconds, tailored to their tone, interests, and language. Visit Futurwise.com to get started for free!