Escape the AI Dystopia: Master Digital Awareness for a Better Future

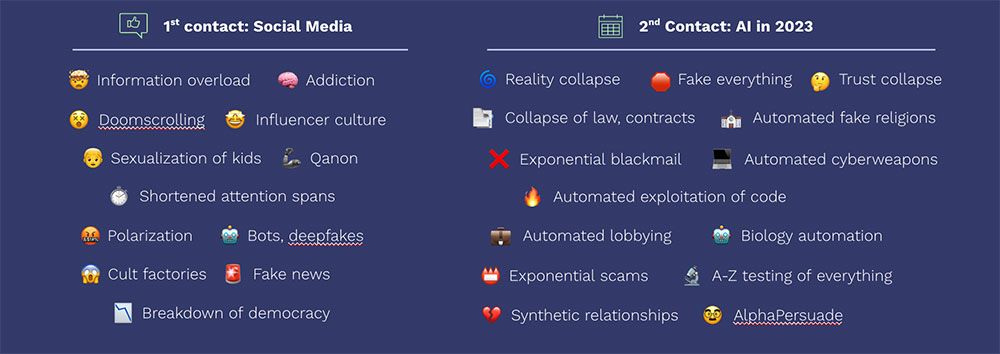

For the past 35 years, we have sleepwalked into the digital age, and our lack of digital awareness has resulted in a range of problems. If we thought that the polarisation, manipulation, and misinformation of the social media era were bad, they will look like child's play compared to what we can expect in the AI era, where technologies such as AI, quantum computing and the metaverse will converge, creating exponentially more polarisation, manipulation, and misinformation. As Tristan Harris said in a 2023 talk called The AI Dilemma, “Without wisdom, without a deep, global understanding of advanced digital tools, the problems our species faces will aggravate exponentially as we move from the age of social media to the age of AI”.

Already, ChatGPT is used for misinformation campaigns in Venezuela and for phishing and malware attacks. In early 2023, ChaosGPT was created with the stated goal of destroying humanity, while WormGPT allows cybercriminals to launch sophisticated cyber-attacks, and this is only the beginning. Moreover, generative AI is flooding the internet with low-quality but very convincing content, further contributing to information overload and making it more challenging for consumers to know what is real and what is fake.

Big Tech has declared war on all smaller players on the web, with OpenAI harvesting copyrighted material to train its models and Google even declaring that the entire internet is fair game for its AI projects. At the same time, generative AI poses an existential threat to high-quality media: as global search engines embrace generative search experiences, publishers lose organic search traffic and, hence, advertising revenue.

While we now have very advanced machine learning models, and some even believe we are getting close to artificial general intelligence, the models are often optimised to benefit the shareholders of the companies that created them and not society as a whole. In this process, platforms are created to increase time on site without considering the needs of the users, and a tiny elite aims to control vast amounts of wealth.

We must approach these new digital technologies, including AI, the metaverse and quantum computing, with great caution and consideration to avoid the pitfalls of moving too fast and breaking things, as we've seen with social media. Instead of repeating past mistakes, let's harness the power of these technologies to create a future that benefits humanity as a whole, not just a select few.

To achieve this vision, we must become digitally aware. We must wake up from doom-scrolling TikTok videos, and we must read the instruction manuals, if they exist, of these very powerful tools, especially where children are involved. Yes, we are all busy leading our lives, but this is so fundamental that we have to look up and become aware of what’s happening and where we are going. If we are not looking up, if we are ignoring the signals that our society is on a path of destruction, how will we be able to protect future generations?

Becoming Digitally Aware

To become digitally aware, it is vital to have access to the latest knowledge covering these technologies. However, most of the world’s content on innovation is in English, while only 1.5 billion people speak English, leaving 6.5 billion people unable to leverage the latest information available. Moreover, most of the information is written for readers who already have some understanding of the field they are interested in, making it challenging for beginners who do not speak English to enter a new field. On top of that, to stay at the cutting edge of innovation, you need to know where to find that information, which gives an unfair advantage to those who know where to source high-quality information.

To overcome these challenges, the crowd relies on social media to find its way through the information overload. Unfortunately, the algorithms on social media determine what the crowd reads, and they are tailored towards negative, polarising content, as that is what delivers the most ad clicks. By maximising user engagement, big tech and media companies profit off outrage and division, according to Prof. Galloway.

They thrive on provocative content that sparks online arguments rather than on more neutral facts and discussion. This has taken a toll on mental health, especially among youth, and undermines the social cohesion needed for action on shared challenges. As such, we need to find a way to circumvent the social media algorithm to deliver a positive message to the crowd and give them access to the knowledge needed to understand our rapidly changing world.

We can become digitally aware by focusing on three key areas: education, verification, and regulation.

Education

Firstly, concerning education, the emphasis must be on globally disseminating knowledge about the technologies shaping our daily experiences. It's insufficient to merely incorporate AI into educational frameworks, as ethically sound as that might be; the real issue extends to elevating global digital literacy.

While it may be tempting to consider today's generation as digital natives, we must acknowledge a critical distinction: digital nativity does not inherently bestow digital awareness. Navigating the myriad ethical and societal complications emerging from AI adoption necessitates a thorough understanding of the ramifications of our digital activities and a keen awareness of data rights.

To actualise this objective, our educational investments must go beyond imparting basic technological skills. Education should delve into the ethical, societal, and political aspects of technology usage. This multidimensional approach empowers individuals to make well-informed decisions in their interactions with AI and digital environments. Given that children are exposed to these technologies from a young age, such educational initiatives should commence early in their developmental stages.

By nurturing a pervasive culture of digital awareness, we can arm individuals with the essential knowledge and tools to exercise data sovereignty. This enables them to demand transparency and accountability from Big Tech and to actively contribute to the formulation of a responsible and equitable digital future.

Verification

Secondly, let's address the issue of verification. With the increasing ubiquity and influence of AI in diverse aspects of life, the urgency to develop reliable methods for verifying the operations, intentions, and outputs of these systems cannot be overstated. This demands the creation of intricate systems and tools capable of effectively distinguishing AI-generated content and ascertaining the genuineness of digital identities in an ever-changing online ecosystem.

Consider the growing phenomenon of deepfakes, hyper-realistic digital replicas that can convincingly mimic human identities. I've experimented with creating such deepfakes, and if this capability is broadly accessible, it presents pressing issues around identity verification. Whether one is interacting through voice, video, or within the realms of the metaverse, the ability to confirm the authenticity of the individual on the other end is critical for maintaining trust and credibility in digital interactions.

Admittedly, this represents a formidable technical hurdle. Current limitations in AI detection technologies—acknowledged even by pioneers in the field like OpenAI—mean that existing solutions are far from foolproof. But the stakes are too high for complacency. Living in a world where facts are easily manipulated and identities effortlessly falsified could plunge us into a post-truth society, an outcome with grave societal implications.

Given these realities, the onus is on us to concentrate our research and development endeavours on surmounting this challenge. It will likely involve a multi-disciplinary approach, combining expertise from fields such as cryptography, machine learning, cybersecurity, and ethics, among others. By investing in such comprehensive research efforts, we stand a better chance of developing systems capable of effective verification mechanisms. These would be integral to ensuring the digital realm remains a space of trust and factual integrity, thereby guarding against the dystopian vision of a post-truth society.

Regulation

Lastly, let's focus on the matter of regulation. Despite noteworthy advancements in regulatory frameworks emerging from regions like the European Union, China, and the United States, the speed of bureaucratic processes leaves much to be desired. By the time regulations catch up, considerable harm can already be inflicted. Therefore, I believe we must take a different approach.

A practical way to govern the societal impacts of AI would be to establish a dedicated regulatory body, akin to the U.S. Food and Drug Administration (FDA). Just as the FDA rigorously scrutinises drugs for safety and efficacy in relation to our physical well-being, a similar agency could be responsible for evaluating AI technologies that have the potential to affect mental health, cognition, and decision-making. The forthcoming AI Act in Europe presents a commendable starting point, but the global reach of AI demands that this sort of regulatory framework be implemented on an international scale.

Further, it is imperative that companies engaged in AI development institute ethics boards endowed with substantial authority. Such boards should not be mere figureheads; they must wield decisive power in influencing AI development projects. Constituted by a diverse panel of experts—ethicists, engineers, social scientists, and community representatives—the boards should have the mandate to establish guidelines, monitor the developmental phases, and even discontinue projects that fall short of ethical and safety benchmarks.

By building these measures into the regulatory landscape, we can fortify the decision-making processes around AI development. This proactive stance towards regulation is essential for mitigating the risks and ambiguities tied to rapidly advancing AI technologies, and, crucially, for ensuring that these innovations are developed and deployed in a manner that serves the greater good of society.

Final Thoughts

Achieving the outlined solutions is entirely within reach, provided there is a concerted effort across humanity. As stakeholders in this transformative era, it's imperative to establish a sustainable global culture that places a premium on ethical AI development. This cultural shift should adhere to principles of transparency, accountability, and collaboration, extending across all industry sectors and governmental bodies.

Over the past decade, my work has centred on understanding the complexities and capabilities of these groundbreaking digital technologies. Although my expertise does not lie in software development, data science, or machine learning, the need to comprehend the inner mechanics of these technologies extends beyond the boundaries of technical roles. In an era where our professional and personal lives are increasingly intertwined with these technologies, it is of foundational importance that we elevate our collective awareness. If we fail to acknowledge the broader implications and potential pitfalls that these technologies present, we risk compromising the well-being of future generations.

The potential utility of artificial intelligence is expansive, carrying significant implications for both societal progress and disruption. The responsibility to channel this potential constructively rests with us. Decisions made today will set precedents, establishing either robust protective measures or laying the groundwork for adverse consequences. Through judicious decision-making and the implementation of comprehensive safeguards, it is within our collective capability to construct a future that utilises AI to enrich human life, solve intricate challenges, and serve the greater good of society.