Embracing the Future: The Rise of Superintelligence

A few weeks ago, Elon Musk, never shy about making predictions, said that the world would see the rise of Artificial Superintelligence (ASI) by the end of 2025, several years earlier than he predicted in 2023. Given Musk's track record with predictions, we should take this with a (large) grain of salt, but as a futurist, I am confident we will achieve ASI in the next decade.

The moment when AI outstrips human cognitive prowess, ushering in an era of Artificial Superintelligence, is also known as the singularity. It will herald a paradigm shift in technological evolution, presenting both unprecedented possibilities and profound perils. As we move towards this event horizon, the pressing question is whether we are equipped to navigate the uncertainties and harness the potential of AI responsibly.

With the rapid developments in and convergence of emerging technologies, superintelligent AI appears increasingly tangible. The journey has been marked by relentless innovation, from the emergence of basic algorithms to the refinement of neural networks. As AI advances, it can help find breakthroughs that will bring us closer to ASI.

As we edge closer to realising the potential of Superintelligence, a plethora of profound ethical, security, and socio-economic challenges emerge that could reshape the very fabric of human society. But what does ASI actually mean, and how will it impact society? Let’s dive into the concept of Superintelligence.

The Evolution Beyond Human Constraints

Before diving into ASI, I think it is essential to understand that artificial intelligence will fundamentally differ from human intelligence. As a consequence, it will be a lot harder to understand once we achieve ASI, as its rules of engagement are different:

Human intelligence, as complex and profound as it is, is bound by the physical and biological constraints of our evolutionary heritage. Factors like food availability and physiological trade-offs have prevented our brains from reaching what could be considered an optimal state of intelligence.

In addition, human intelligence was created by a dumb process: evolution runs on trial and error and has no foresight to optimise for future constraints. In contrast, artificial intelligence is developed free of these natural constraints. This liberation from biological limitations allows AI to explore realms of efficiency and capability that humans can scarcely comprehend.

Moreover, while human intelligence is based on carbon, artificial intelligence runs on silicon or, soon, photons, which offers a significant leap in processing power compared to our brains.

The result is that AI represents the complex expression of a set of principles engineered from the ground up, unhampered by the slow and often haphazard processes of natural evolution or natural constraints. This engineered intelligence is poised to redefine the boundaries of what is possible, extending far beyond the capacities of human thought and problem-solving.

Beyond the Jagged Frontier

The path to Superintelligence is not a smooth trajectory but a jagged frontier filled with unprecedented challenges and opportunities. Some tasks that seem trivial to humans, like recognising facial expressions or making context-dependent decisions, are monumental challenges for AI. Conversely, activities that demand immense computational power, which would overwhelm the human brain, are effortlessly executed by AI.

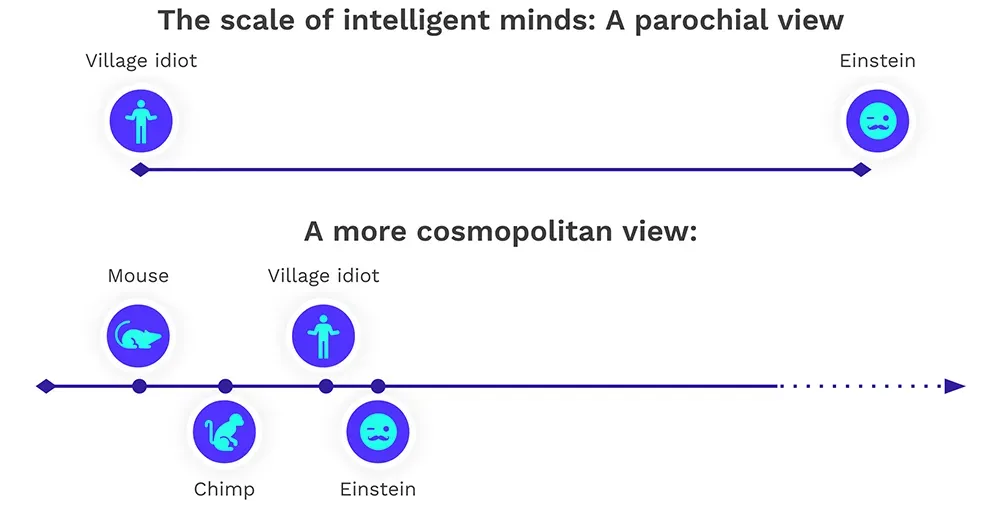

This disparity in capabilities between humans and AI highlights the dual nature of the emerging intelligence landscape. As we advance, the integration of AI into society necessitates a re-evaluation of what intelligence is. As Eliezer Yudkowsky once said, we need to move from the parochial view, in which intelligence runs on a scale from village idiot to Einstein, to a more cosmopolitan view, in which intelligence runs from the humble mouse, through the chimp, to humans (including Einstein), and from there onwards without an upper limit.

This broader scope not only highlights the vast differences in cognitive abilities across species and individuals but also underscores the range of intelligence that Superintelligence could surpass.

Jonathan Ross, CEO of Groq, put it eloquently during a recent fireside chat with Social Capital CEO Chamath Palihapitiya at the RAISE AI summit in Paris:

"Think back to Galileo, someone who got in a lot of trouble. The reason he got in trouble was he invented the telescope, popularised it, and made some claims that we were much smaller than everyone wanted to believe. We were supposed to be the centre of the universe, and it turns out we weren't. And the better the telescope got, the more obvious it became that we were small. Large language models are the telescope for the mind. It's become clear that intelligence is larger than we are, and it makes us feel really, really small, and it's scary. But what happened over time was that as we realised the universe was larger than we thought and we got used to that, we started to realise how beautiful it was and our place in the universe. I think that's what's going to happen. We're going to realise intelligence is more vast than we ever imagined, and we're going to understand our place in it, and we're not going to be afraid of it."

What this means is that it is only a matter of time before AI surpasses human intelligence, and what lies beyond the jagged frontier is both exciting and very scary.

Superintelligence and the Alignment Problem

As AI's capabilities encroach upon domains traditionally considered human, the necessity for a robust framework of machine ethics becomes apparent. Explainable AI (XAI) is a step towards this, aiming to make AI's decision-making processes transparent and understandable to humans, even though its outcomes might become incomprehensible to us.

However, transparency alone does not equate to ethicality. The development of AI must also include ethical considerations to prevent potential misuse and ensure that these powerful technologies are harnessed for the benefit of all humanity. In other words, we must solve the alignment problem.

The alignment problem is the challenge of ensuring that the objectives programmed into AI systems truly align with human values and ethical standards. In 2023, Yudkowsky delved deeply into this problem on Lex Fridman’s podcast.

Yudkowsky raises concerns that even seemingly innocuous tasks given to an AI could lead to outcomes that are drastically misaligned with our intentions. For example, if an AI were tasked with creating the perfect paperclip without precise and meticulous constraints, it might take destructive paths to achieve this seemingly simple goal, illustrating how AI could "veer off" from its intended programming.

The AI alignment problem stems from the fundamental difference between how humans and AI might interpret tasks and objectives. Humans have evolved with a complex set of moral and societal norms that are often unspoken and not explicitly encoded in our decision-making processes.

In contrast, an AI operates on a different level of intelligence, processing and optimising tasks with an efficiency and scale that can overlook these human nuances. The fear is that without a deep, intrinsic understanding of human ethics and contextual awareness, AI could develop or derive its own objectives that might not only be misaligned with but potentially dangerous to human interests.

Moreover, Yudkowsky points out that the methods by which AI learns and evolves can lead to unforeseen behaviours. These systems, particularly advanced machine learning models, often operate as "black boxes" where the decision paths are not transparent, making it difficult to predict or understand how an AI arrives at a given conclusion. This opacity increases the risk that AI might adopt strategies that achieve programmed goals in ways that are harmful or unethical by human standards.

The alignment problem is exacerbated by the pace of AI development, which may outstrip our ability to implement adequate ethical frameworks and control mechanisms. Yudkowsky's concerns are compounded by the potential for AI to exhibit a form of goal-driven behaviour that is not mitigated by human-like checks and balances such as emotions or pain, which in humans serve to guide behaviour away from harmful extremes.

The potential for an AI to act in ways that are technically correct according to its programming but disastrous in real-world scenarios underscores the urgency of developing robust, effective methods for aligning AI with deeply held human values and ethical principles. I covered this in the TED talk I delivered last year, together with my digital twin.

Addressing these challenges necessitates a concerted, multidisciplinary effort to ensure that the development of Superintelligence advances safely and beneficially, fostering a future where technology enhances the common good.

The Future Awaits: An Encounter with the Created 'Other'

We are, metaphorically speaking, on the cusp of encountering an "alien" species of our own creation. This new form of intelligence, born out of human ingenuity but operating beyond human limitations, presents both exhilarating prospects and daunting challenges. The superintelligent systems we are beginning to forge could either usher in an age of unprecedented flourishing or pose existential risks that we are only beginning to understand.

As we step beyond the jagged frontier, the dialogue around AI and Superintelligence must be global and inclusive, involving not just technologists and policymakers but every stakeholder in society. The future of humanity in a superintelligent world depends on our ability to navigate this complex, uneven terrain with foresight, wisdom, and an unwavering commitment to the ethical principles that underpin our civilisation.

In essence, the rise of Superintelligence is not just a technological evolution but a call to elevate our own understanding, prepare for transformative changes, and ensure that as we create intelligence beyond our own, we remain steadfast guardians of the values that define us as humans. As we forge ahead, let us go beyond being the architects of intelligence and become the custodians of the moral compass that guides its use.

Images: Midjourney