AI Detection: The Tool That OpenAI Refuses to Release

Is OpenAI really committed to transparency, or is Sam Altman more interested in protecting profits at the expense of academic integrity?

OpenAI has developed a powerful tool that can detect AI-generated text with 99.9% accuracy, yet it remains unreleased. The internal debate over deploying this watermarking technology highlights the tension between transparency and user retention.

Teachers, professors, policymakers, and members of the public who are desperate to combat AI-assisted cheating and misinformation see this tool as essential. However, surveys show that nearly 30% of ChatGPT users would be deterred from using the service if such a tool were implemented. There are also concerns about a potentially disproportionate impact on non-native English speakers and about how easily the watermark could be bypassed.

Sam Altman, OpenAI’s CEO, has been involved in discussions about the tool but has not pushed for its release. This stance fuels skepticism about Altman’s true motivations. I would argue that despite Altman’s public declarations about wanting to better humanity, his reluctance to release the anti-cheating tool suggests a prioritization of profit over ethical responsibility. This perspective aligns with previous criticisms that Altman’s actions often serve to enhance OpenAI’s market dominance rather than contribute to societal good.

Internal documents reveal that OpenAI’s watermarking technology has been ready for a year, but the company has hesitated to release it, citing fears of false accusations and user backlash.

This hesitancy speaks volumes about OpenAI’s internal conflicts. While the technology could significantly improve academic integrity, it could also reduce ChatGPT’s attractiveness to a substantial portion of its user base. Employees advocating for the tool’s release argue that the benefits far outweigh the risks, emphasizing that failing to act diminishes OpenAI’s credibility as a responsible AI developer.

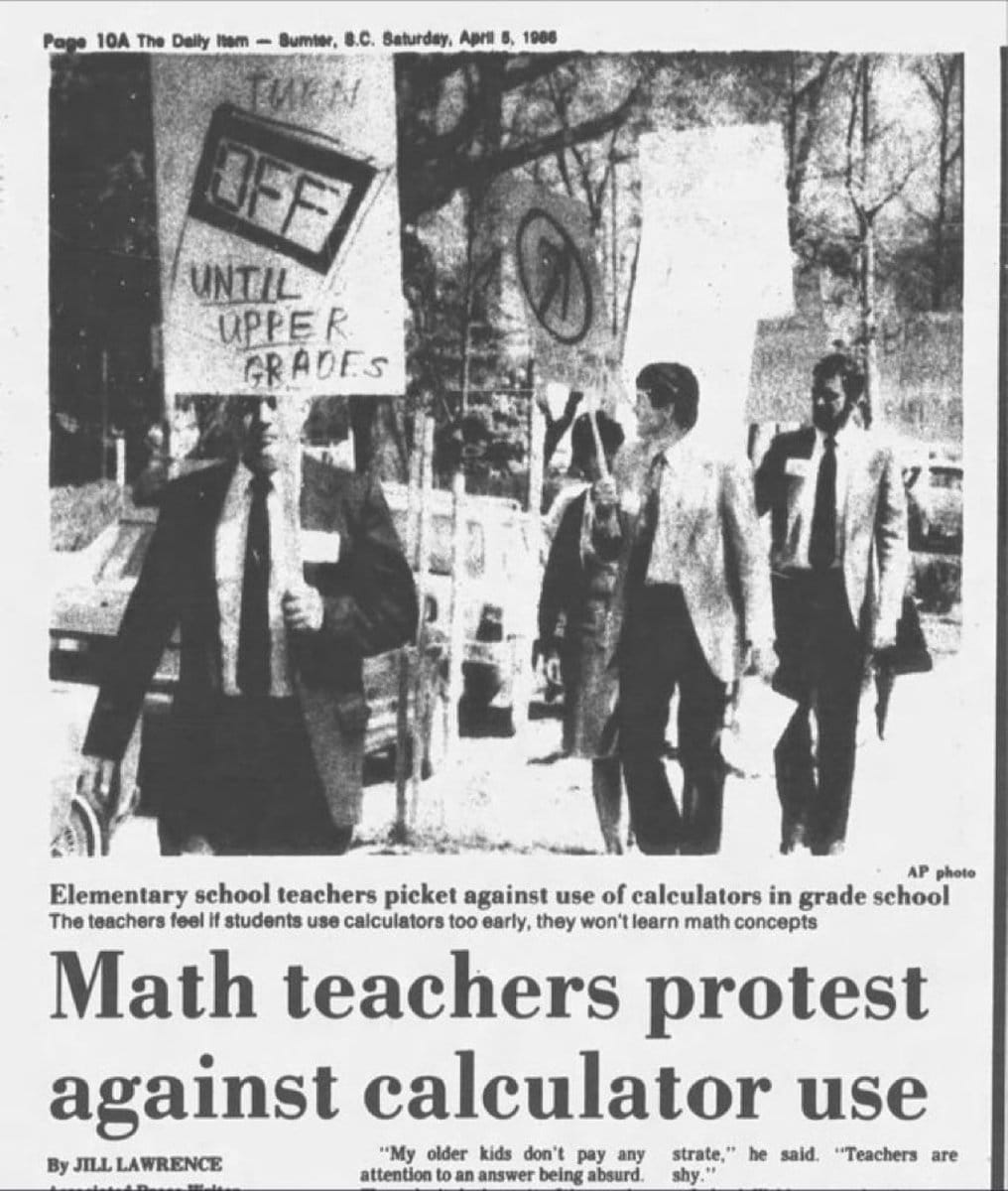

Although the reluctance to release the tool speaks volumes about Sam Altman, I would also argue there is an urgent need for teachers to be reskilled and to actually teach students how to embrace the AI that will define their future. Sure, students are actively using AI, and some use it to cheat on papers and exams, but instead of bashing students, we should redesign the educational system around AI. Otherwise, it seems history is repeating itself with the introduction of a new technology.

The debate over the anti-cheating tool encapsulates the broader conflict within OpenAI between commercial interests and ethical responsibilities. Should the fear of losing users outweigh the benefits of curbing academic dishonesty? Or does this situation reveal that OpenAI, under Altman’s leadership, is more focused on maintaining its market position than genuinely advancing ethical AI use?

Read the full article in the Wall Street Journal.
